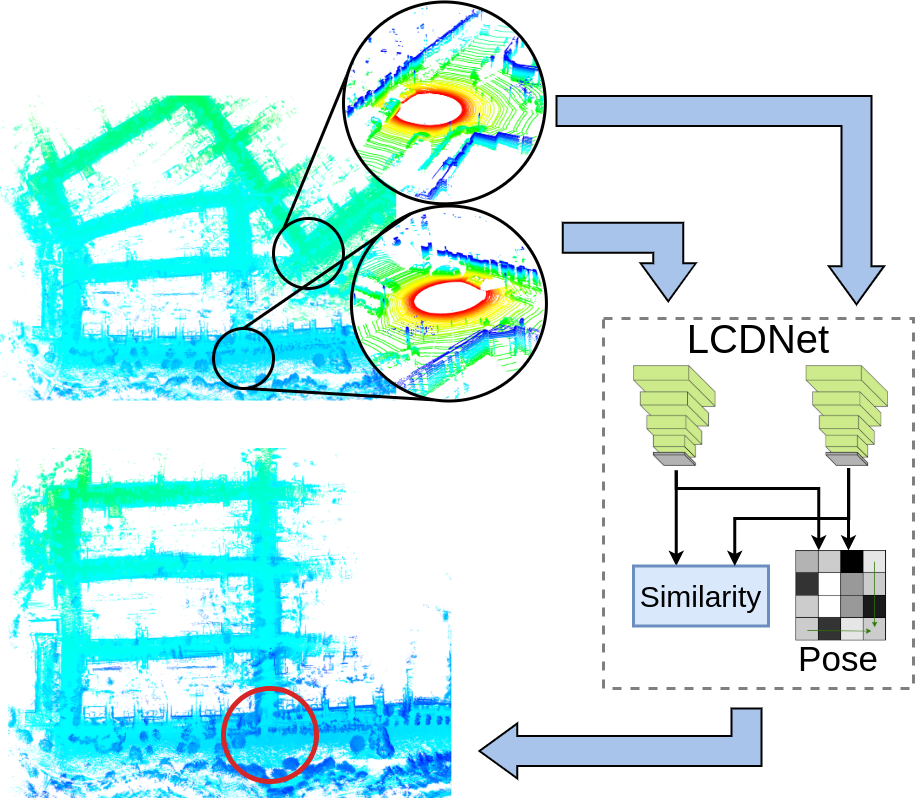

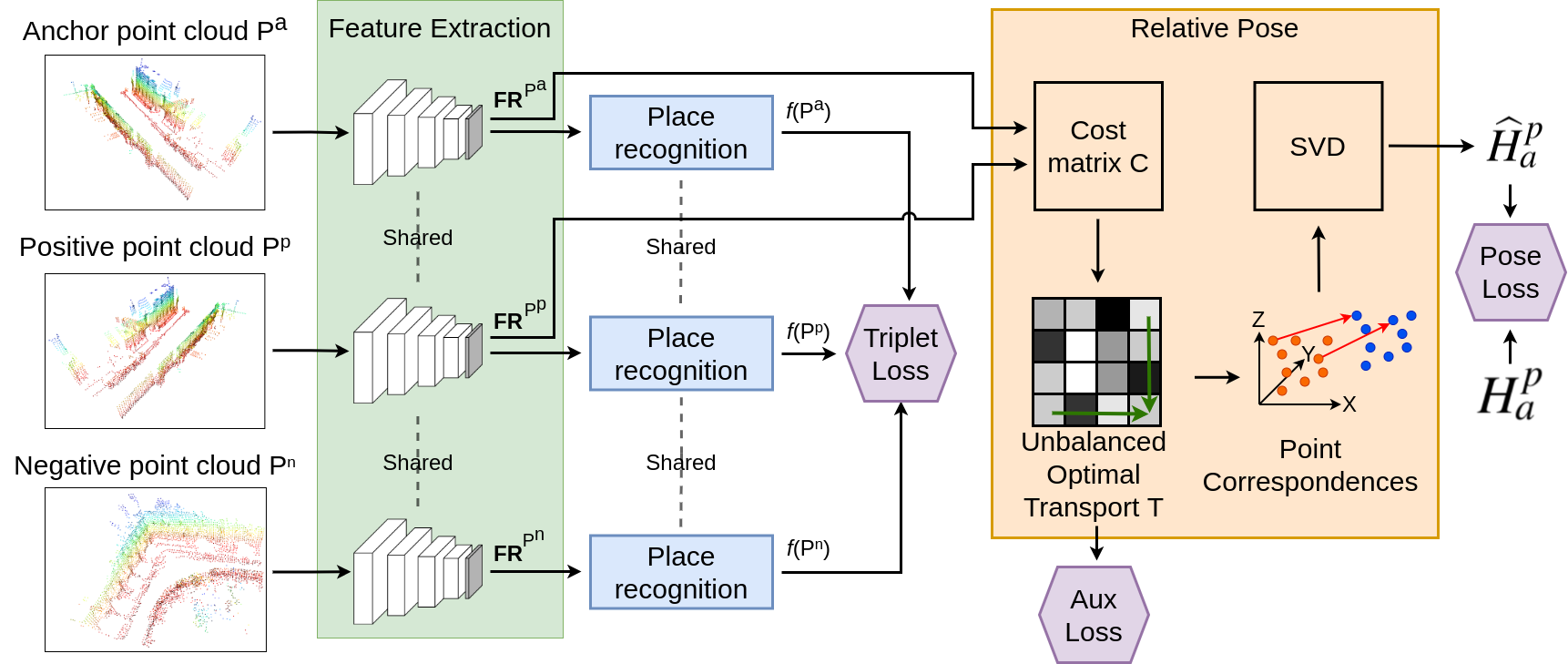

Loop closure detection is an essential component of Simultaneous Localization and Mapping (SLAM) systems, as it reduces the drift accumulated over time. Over the years, several deep learning approaches have been proposed to address this task; however, their performance has been subpar compared to handcrafted techniques, especially when dealing with reverse loops. In this paper, we introduce the novel LCDNet that effectively detects loop closures in LiDAR point clouds by simultaneously identifying previously visited places and estimating the 6-DoF relative transformation between the current scan and the map. LCDNet is composed of a shared encoder, a place recognition head that extracts global descriptors, and a relative pose head that estimates the transformation between two point clouds. We introduce a novel relative pose head based on the unbalanced optimal transport theory that we implement in a differentiable manner to allow for end-to-end training. Extensive evaluations of LCDNet on multiple real-world autonomous driving datasets show that our approach outperforms state-of-the-art techniques by a large margin, even when dealing with reverse loops. Moreover, we integrate our proposed loop closure detection approach into a LiDAR SLAM library to provide a complete mapping system and demonstrate its generalization ability using a different sensor setup in an unseen city.
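As a rough illustration of the relative pose head idea described above (soft point matching through unbalanced optimal transport, followed by a closed-form rigid fit), the following NumPy sketch may be useful. It is not the LCDNet implementation. It assumes that per-point feature descriptors are already available (standing in for the output of the shared encoder), uses the standard entropic unbalanced Sinkhorn scaling iterations with uniform marginals, and recovers the transformation with a weighted Kabsch/SVD step. The function names and the values of `eps`, `rho`, and `n_iters` are hypothetical choices made for this example only.

```python
import numpy as np


def unbalanced_sinkhorn(cost, eps=0.05, rho=1.0, n_iters=100):
    """Entropic unbalanced OT plan with uniform marginals (KL-relaxed).

    eps is the entropic regularization and rho the marginal relaxation
    strength; both values are assumptions made for this sketch.
    """
    n, m = cost.shape
    a = np.full(n, 1.0 / n)
    b = np.full(m, 1.0 / m)
    K = np.exp(-cost / eps)
    u, v = np.ones(n), np.ones(m)
    tau = rho / (rho + eps)  # exponent < 1 softens the marginal constraints
    for _ in range(n_iters):
        u = (a / (K @ v)) ** tau
        v = (b / (K.T @ u)) ** tau
    return u[:, None] * K * v[None, :]


def weighted_rigid_transform(src, tgt, w):
    """Weighted Kabsch: find R, t minimizing sum_i w_i ||R src_i + t - tgt_i||^2."""
    w = w / w.sum()
    mu_s = w @ src
    mu_t = w @ tgt
    H = (src - mu_s).T @ (w[:, None] * (tgt - mu_t))
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_t - R @ mu_s
    return R, t


def estimate_relative_pose(src_pts, tgt_pts, src_feat, tgt_feat):
    """Soft-match points by feature similarity, then fit a rigid transform."""
    fs = src_feat / np.linalg.norm(src_feat, axis=1, keepdims=True)
    ft = tgt_feat / np.linalg.norm(tgt_feat, axis=1, keepdims=True)
    # Squared Euclidean distance between unit-norm features, in [0, 4].
    cost = np.clip(2.0 - 2.0 * fs @ ft.T, 0.0, None)
    P = unbalanced_sinkhorn(cost)
    row_mass = P.sum(axis=1)
    # Each source point is matched to a soft (barycentric) target position.
    tgt_proj = (P @ tgt_pts) / row_mass[:, None]
    # Points that received little transport mass contribute less to the fit.
    return weighted_rigid_transform(src_pts, tgt_proj, row_mass)
```

Every step here is built from matrix products, exponentials, and an SVD, so the same computation written in an automatic differentiation framework could in principle be trained end to end. Because the marginal constraints are relaxed rather than enforced exactly, points with no good counterpart in the other scan can receive less transport mass instead of being forced into a match.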

LCDNet: Deep Loop Closure Detection and Point Cloud Registration for LiDAR SLAM

Daniele Cattaneo, Matteo Vaghi, Abhinav Valada